| $ lsblk

...

sdt 65:48 0 200G 0 disk

└─mpathb 253:11 0 200G 0 mpath

├─i1666574565-lvmlock 253:13 0 10G 0 lvm

├─i1666574565-pvc--23dc3ecf--37fb--44ee--b8a6--7667a98aeb05 253:15 0 20M 0 lvm

└─i1666574565-pvc--0878818c--5d93--4ed4--b034--5bf37a657a6e 253:16 0 20M 0 lvm /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-b00bec5dd6f4958e-datadir

# virtiofs 类型的 Pod

$ mount | grep 3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9

shm on /run/containerd/io.containerd.grpc.v1.cri/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shm type tmpfs (rw,nosuid,nodev,noexec,relatime,size=65536k)

overlay on /run/containerd/io.containerd.runtime.v2.task/k8s.io/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254839/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254839/work)

tmpfs on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared type tmpfs (ro,mode=755)

overlay on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254839/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254839/work)

overlay on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254839/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254839/work)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9-2c4e4dce04926bea-resolv.conf type xfs (ro,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9-2c4e4dce04926bea-resolv.conf type xfs (ro,relatime,attr2,inode64,noquota)

overlay on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/251248/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254840/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254840/work)

overlay on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/251248/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254840/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254840/work)

/dev/mapper/i1666574565-pvc--0878818c--5d93--4ed4--b034--5bf37a657a6e on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-b00bec5dd6f4958e-datadir type ext4 (rw,relatime,data=ordered)

/dev/mapper/i1666574565-pvc--0878818c--5d93--4ed4--b034--5bf37a657a6e on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-b00bec5dd6f4958e-datadir type ext4 (rw,relatime,data=ordered)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-d10c2427b7cfd382-hosts type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-d10c2427b7cfd382-hosts type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-aec3c5c428ab0e89-termination-log type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-aec3c5c428ab0e89-termination-log type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-0a63282c5f8f4163-hostname type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-0a63282c5f8f4163-hostname type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-a9d3c3e132ce299b-resolv.conf type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-a9d3c3e132ce299b-resolv.conf type xfs (rw,relatime,attr2,inode64,noquota)

tmpfs on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/mounts/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-30db53e3c43cb09f-serviceaccount type tmpfs (ro,relatime,size=32675592k)

tmpfs on /run/kata-containers/shared/sandboxes/3f1316b887ba0cf7b0144a095fb543a262ae0c2b32a94c7658e1dbb3707c5ea9/shared/cc3782d5f7aef968f640a0c161ee027efec8054c9860cae9d7254f8fe0659cce-30db53e3c43cb09f-serviceaccount type tmpfs (ro,relatime,size=32675592k)

# direct 类型的 Pod

$ mount | grep 3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7

shm on /run/containerd/io.containerd.grpc.v1.cri/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shm type tmpfs (rw,nosuid,nodev,noexec,relatime,size=65536k)

overlay on /run/containerd/io.containerd.runtime.v2.task/k8s.io/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254837/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254837/work)

tmpfs on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared type tmpfs (ro,mode=755)

overlay on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254837/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254837/work)

overlay on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254837/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254837/work)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7-27977320e8b95e05-resolv.conf type xfs (ro,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7-27977320e8b95e05-resolv.conf type xfs (ro,relatime,attr2,inode64,noquota)

overlay on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/251248/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254838/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254838/work)

overlay on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379/rootfs type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/251248/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254838/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/254838/work)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-b03e8081dab8db44-hosts type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-b03e8081dab8db44-hosts type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-fc067734b16d5aec-termination-log type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-fc067734b16d5aec-termination-log type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-9578923c5ca9be73-hostname type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-9578923c5ca9be73-hostname type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-19d449c66d9d623d-resolv.conf type xfs (rw,relatime,attr2,inode64,noquota)

/dev/sda2 on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-19d449c66d9d623d-resolv.conf type xfs (rw,relatime,attr2,inode64,noquota)

tmpfs on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/mounts/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-fd37e76da5e2d86d-serviceaccount type tmpfs (ro,relatime,size=32675592k)

tmpfs on /run/kata-containers/shared/sandboxes/3763faeb5fc0aa25265b751123b63fbbae1c8aa35bec202c42a4d569c0ef63a7/shared/6da9c84109d97ce2895b3e5b38631ebdf7185fc7b915cf4766572803ce4df379-fd37e76da5e2d86d-serviceaccount type tmpfs (ro,relatime,size=32675592k)

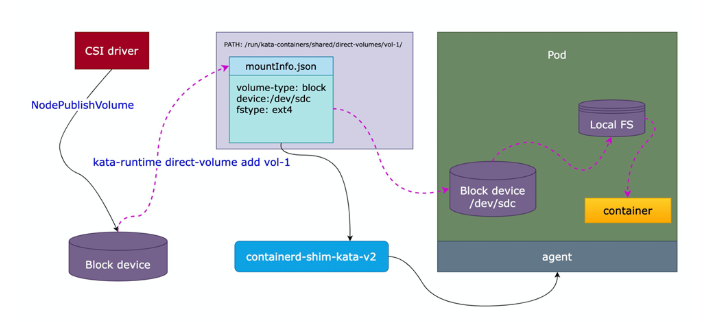

# 卷直通场景下,持久卷的块设备由 CSI 格式化后 attach 到节点后,不会执行 mount 到节点的操作,而是由 Kata Agent 触发 mount 操作,挂载到 VM 中,因此 host 端看不到挂载点信息,所以写在直通卷中的数据不会出现在 host 中

# 两种模式下,rootfs 的挂载点一致

|
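Both observations can be re-checked on a node by filtering `mount` output. Below is a minimal sketch. It assumes the `SOURCE on TARGET type FSTYPE (OPTIONS)` line format shown in this post, and that mount targets contain no spaces. The function names `mount_points_for` and `sandbox_rootfs_mounts` are hypothetical helpers, not part of Kata Containers or containerd. The `/run/kata-containers/shared/sandboxes/` prefix is taken from the output above.

```shell
#!/bin/sh
# Minimal sketch with hypothetical helpers, not a Kata or containerd tool.
# Assumes `mount`-style input lines: "SOURCE on TARGET type FSTYPE (OPTIONS)",
# with no spaces inside SOURCE or TARGET.

# Print every mount target whose source is exactly DEVICE ($1).
# Empty output means the device has no mount point in the input,
# which is what a passthrough (direct) volume looks like on the host.
mount_points_for() {
  awk -v dev="$1" '$1 == dev && $2 == "on" { print $3 }'
}

# Print the rootfs mount targets inside one Kata sandbox directory.
# $1 is the sandbox ID, as used with `mount | grep` in the output above.
sandbox_rootfs_mounts() {
  awk -v sb="$1" '
    BEGIN { prefix = "/run/kata-containers/shared/sandboxes/" sb "/" }
    # keep targets that start with this sandbox prefix and end in /rootfs
    $2 == "on" && index($3, prefix) == 1 && $3 ~ /\/rootfs$/ { print $3 }
  '
}
```

For example, `mount | mount_points_for /dev/mapper/i1666574565-pvc--0878818c--5d93--4ed4--b034--5bf37a657a6e` should list the `mounts/` and `shared/` copies of the virtiofs Pod's `datadir`. A passthrough volume's device should produce no output. `mount | sandbox_rootfs_mounts <sandbox-id>` should list the same `mounts/.../rootfs` and `shared/.../rootfs` layout for either mode. Matching on exact fields with `awk` avoids the false positives a plain `grep` on a substring can give.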